Across the world, billions of dollars are committed every year to new public transport and road infrastructure projects: commuter rail, subways, new roads, bypasses, tunnels, bridges, etc. Committees spend years planning these projects; it often takes more than a decade until a project is implemented. Once the projects are completed, we expect them to yield their benefits over many decades. Over the last century, planning and estimation processes have been refined; they work reasonably well. Unfortunately, current processes cannot and do not take self-driving vehicles into account. But it is now clear that self-driving cars will fundamentally change our traffic patterns. This greatly increases the risk that public transport projects will already be obsolete by the time they are completed. In the following we will show that the most adequate action for cities and states is a temporary moratorium on new public transport projects (i.e. systematically delaying the start of the planning phase):

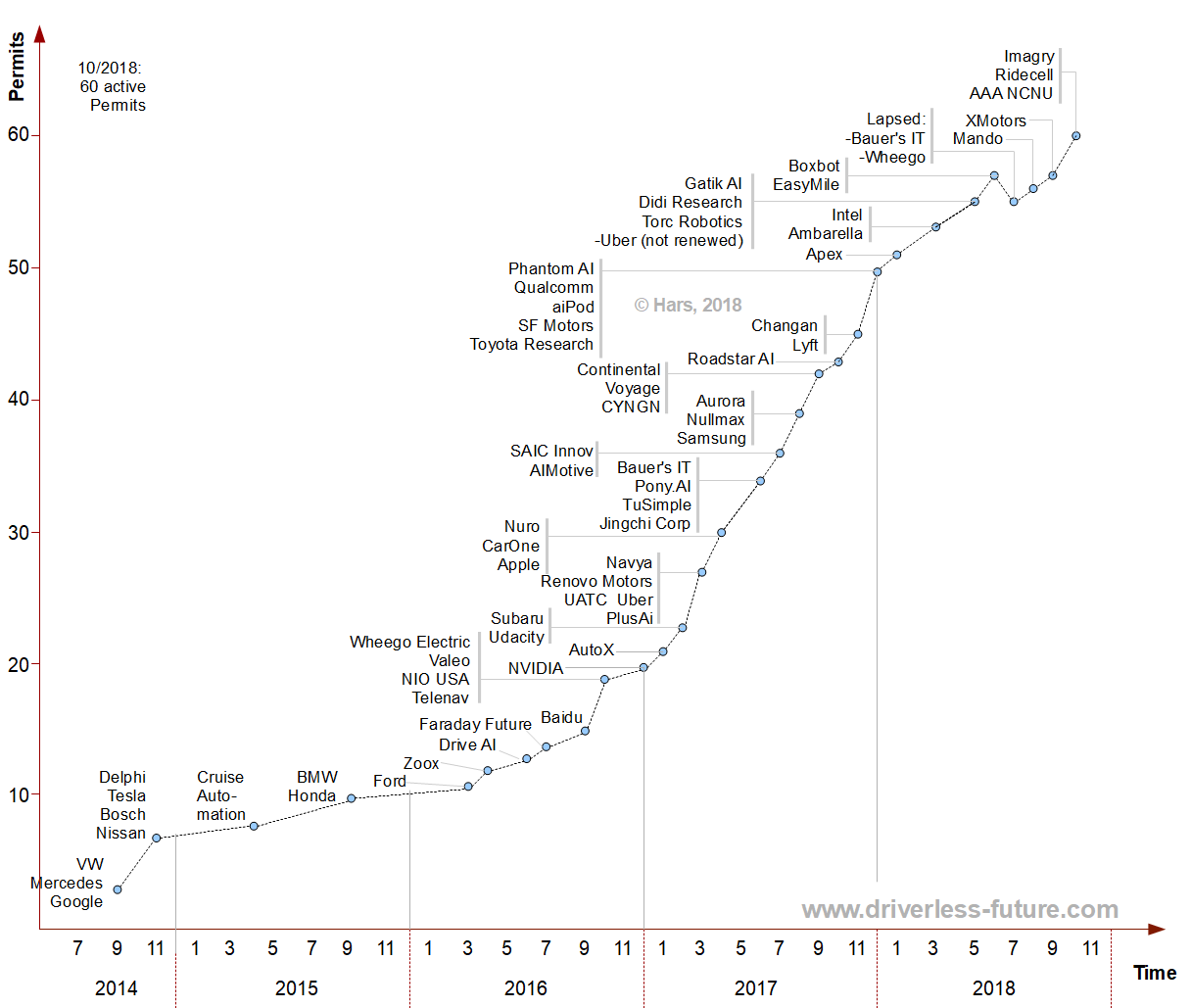

At the current point in time, self-driving car technology is not yet ready for widespread adoption, but there can no longer be any doubt about its viability. Many companies are racing for implementation. Millions of kilometers are now routinely test-driven in self-driving vehicles; GM and Jaguar have started producing self-driving car models; Waymo is now operating self-driving cars without test drivers inside the car. Anyone who performs an extensive analysis of the size of the self-driving car problem, the economic incentives for participants in the self-driving car space and the state of the industry must come to the conclusion that we are very likely to have large numbers of self-driving cars, buses, trucks and machines in our cities within the next decade (see the postscript of this article for a brief outline of key elements of such an analysis).

Once self-driving cars operate in cities by the thousands, we will see fundamental changes: the number of privately owned cars will fall. The higher the urban density, the quicker car ownership will recede, and with it the need for parking lots. Traditional public transport will be challenged by self-driving taxis and ridesharing services. Rail-based transport solutions will suffer from their inflexibility compared to buses. The biggest problems will occur on the feeder lines, not so much on the high-capacity, high-frequency core lines. Urban and highway traffic will flow better as self-driving cars become live traffic sensors and city-wide traffic routing algorithms are applied. (No, this is not science fiction: it will be a core, inherent concern of any provider of self-driving mobility services, and it has the benefit of being a win-win situation, with goals identical to those of city traffic management.) We will see the distribution of traffic change significantly as trucks begin to operate 24/7, self-driving fleet vehicles are used for delivery at night and ridesharing services increase the average occupancy per vehicle on certain routes (more likely on long-distance trips and long commutes, less relevant for inner cities). As a consequence, our road-based mobility system will change fundamentally. Of course, this will not occur overnight, but the changes will greatly affect any new road infrastructure project being planned today.

Ideally, we would map out these changes today and then plan for the kind of mobility system which will be operating in the forties and fifties of this century. But there is too much uncertainty and too little chance to achieve widespread agreement on what this situation will look like. There is no established knowledge, and no agreement on how to determine the likely scenarios.

But even if we cannot yet reach agreement on what the future will look like, it should be possible to reach agreement that this future will be very, very different from the one we are planning for today. Given the uncertainties, there are three possibilities:

1) Continue planning on the basis of our current processes and knowledge.

2) Delay projects for a few years, hoping for improvements in our understanding of the effects of self-driving car (SDC) technology.

3) Design new projects with robustness or elements targeted for self-driving car scenarios.

The conditions under which Alternative 1 is rational are very narrow: it only makes sense for projects which are unlikely to be challenged significantly by self-driving cars. New rail-based projects certainly do not fall into this category. But bypasses, highway extensions (or new highways) and most other projects also critically depend on estimates of traffic distributions which we can no longer extrapolate from today. Therefore we must balance the disadvantages of delaying the start of such a project for a few years against the advantages of lower expenses in the near and medium term and possibly a better system in the long term. Because we are likely to have much better ways of managing traffic flow in 20 years, it is unlikely that the congestion problems which we may fear as a result of delaying a project today will actually materialize. If we do business as usual, we may find in 20 years' time that a significant share of the projects we are starting today are no longer necessary and billions of dollars have been wasted.

Given the state of our knowledge it appears difficult to design robustness for self-driving car scenarios (Alternative 3) into new projects today.

Thus the only viable option is Alternative 2. If we delay new projects for a few years, we save taxpayer money but don’t have to fear enormous congestion in a few decades because self-driving car technology will give us many new levers for improving traffic flow. By delaying projects, we will increase our common knowledge and shared understanding of the impact of self-driving cars. Simulations, scientific research and experiences from the first deployments of fleets of self-driving cars (such as Waymo in Phoenix) will provide us with insights that we can apply to the planning and estimation of new public transport and road infrastructure projects. We will learn how traffic changes in the first cities with self-driving cars; we will understand that fleets have an impact on the way that traffic is routed and that we can use them to detect and combat congestion. We will be much more open to introducing new parameters into our mobility infrastructure: some lanes might be dedicated to self-driving cars only; they could be made narrower because these cars can drive with more precision. We might change the direction of inner lanes on some roads depending on travel flows, or convert curbside parking spaces into lanes during peak hours (only self-driving vehicles would be permitted to park there at night; they would be in use during the day or would have to drive themselves to another parking space before peak hours begin).

Thus at this point, the most rational approach for new public transport and road infrastructure projects is to put the initiative on hold! This is an action on which the various stakeholders can reach consensus much more easily than on planning directly for an unknown future with self-driving cars. It also has the side benefit of increasing the pressure on the planners to seriously consider the effects of self-driving cars. We will all be better off if we place a moratorium on new public transport and road infrastructure projects today!

Postscript:

A key element of the argument presented in this article is the claim that we are likely to have very many self-driving cars, buses, trucks and machines in our cities within the next decade. This is not obvious and runs counter to the quick, intuitive assessment of many. Unfortunately, the matter is complex and requires an intensive look at the issues from multiple perspectives: technical, economic, legal, innovation-theoretic. Misleading intuitions cannot be eradicated with just a few sentences because they are usually based on too many self-supporting half-truths (see my paper on Misconceptions of self-driving cars). If you want to spend time thinking the different aspects through, here is a brief outline of some of the issues (for more on this, attend one of my workshops or contact me directly):

1. Technology

1.1. Problem of full self-driving has been shown as solvable.

1.1.1. Problem is inherently information processing in a limited, but complex domain. Interpretation is hard but can be solved with current methods.

1.1.2. Known limitations (driving in snow / heavy rain etc.) are not fundamental limitations

1.1.3. Self-driving car does not need general world (human-like) intelligence.

1.1.4. Remote operations center can handle many of the so-called hard problems (e.g. interpreting a police officer's hand signals)

1.1.5. Having to use pre-defined maps is not a limitation for nationwide rollout (and nationwide rollout will not be the initial use-case anyway)

1.2. Self-driving cars will reach a state where they are much safer than the average human driver

1.2.1. Much better attention than human drivers

1.2.2. Larger field of view than human drivers (exception: highways)

1.2.3. Fast, continuous learning and refinement of algorithms.

1.2.4. Human drivers cause many preventable accidents.

1.2.5. Humans are better at interpreting certain rare scenes

1.2.6. Self-driving cars are better at detecting common situations early

1.2.7. Vehicles have sufficient processing power and sensor mix for self-driving

1.2.8. Economic usefulness of SDC technology does not require ability to operate everywhere (-> technology can start early)

1.3. Rapid evolution of technology

1.3.1. Innovation process is spread across the world; involves many companies in hardware, sensors, software, mobility, etc.

1.3.2. Enormous progress in AI algorithms

1.3.3. Sensor mix is maturing; still rapid innovation in sensor technology and rapid fall of sensor and hardware prices

1.3.4. Number of companies working on self-driving cars still increasing

1.3.5. Production of first self-driving car models has already started (GM, Jaguar; not quite there yet: Tesla)

2. Economics

2.1. Disruptive potential of self-driving cars is eliminating the driver

2.1.1. All industries potentially affected through the impact on logistics

2.1.2. SDC technology challenges competitive position of many companies (not just auto industry) and countries -> enormous competitive pressure

2.1.3. Removing the need for a driver requires rethinking all processes in the auto / transport / mobility industry

2.1.4. Diffusion of self-driving cars at a much faster pace than other auto industry innovations (won’t take decades)

2.2. SDCs will lead to increased use of mobility as a service

2.2.1. Car ownership must fall (a detailed analysis of cost/benefit/comfort associated with owning a car / calling a self-driving taxi)

2.2.2. Self-driving taxis will slash costs for individual motorized mobility (but costs for privately owned SDCs will rise compared to current cars)

2.2.3. Vehicle stock in developed nations will fall significantly

2.2.4. Mobility as a service market exhibits network effects -> first mover advantage means winner takes all -> extreme race for being first

2.2.5. Regulation of SDC fleets by cities or countries is very likely

2.2.6. Public transport will face significant challenges from providers of self-driving mobility services

2.2.7. Rail-based networks are at a disadvantage because of their low flexibility. Only high-capacity lines can remain profitable.

2.2.8. SDCs will increase throughput in cities; increased congestion very unlikely (this is contrary to many intuitions)

2.3. SDCs will increase person-kilometers traveled but not necessarily vehicle-kilometers traveled (impacted by occupancy rate)

2.3.1. Mobility services are not just economically viable in high density urban areas but also in many lower density rural areas (of the United States)

2.3.2. Occupancy rate for long-distance trips and longer commutes will increase

2.3.3. In short-distance, local travel, passengers will be reluctant to share rides; buses will be preferred over taxis for ridesharing

2.3.4. Self-driving long distance buses will multiply

2.4. High valuation of SDC-related businesses

2.4.1. Enormous capital inflow for all business related to self-driving car technology because of high potential gains associated with market shakeups

2.4.2. High-priced human capital in self-driving car technology; rapid movement between companies (rapid transfer of knowledge from leaders to well-funded followers)

2.4.3. Number of cars sold will fall. OEMs will lose significant revenue. Not all OEMs will be able to survive this transition of the industry.

2.4.4. Auto industry will change. Fleets will be powerful customers and heavily influence vehicle design.

2.4.5. SDCs will slash delivery costs.

2.4.6. Ecommerce will grow. Retail will suffer. Supermarkets will close.

3. Legal/political

3.1. Self-driving car technology seen as key technology affecting global balance of economic and military power

3.1.1. Countries compete to grow/protect their own self-driving car technology and related industries

3.1.2. Opposition to SDCs does not have force. Risk of job loss widely acknowledged but potential benefits to population as a whole are too large

3.1.3. Regulatory bodies lack knowledge and competence in rapidly evolving SDC technology

3.1.4. If perceived as necessary, regulatory approval for self-driving cars may occur rapidly

3.1.5. Very little attention yet on the wider regulatory implications of self-driving cars (in cities, as a business model, as a universal service, as a competitor to public transport etc.)

4. Innovation

4.1. Innovation process

4.1.1. Self-driving car technology close to end of fluid first phase of disruptive innovation processes

4.1.2. Shakeout and consolidation in the SDC technology likely to be observable soon

4.1.3. Self-driving car hard- and software likely to become commoditized. Not a source of long-term competitive advantage.

4.2. Diffusion

4.2.1. Self-driving car technology will be adopted very rapidly. First mover advantage for fleets. Heavy demand by affluent consumers expected.

4.2.2. Catalyst for innovation in other areas. Transport, retail, autonomous machines and solutions.

4.2.3. Enabler for electric vehicles. Self-driving cars will greatly increase not just the number of electric vehicles but, even more sharply, the number of person-kilometers traveled in self-driving electric (fleet) vehicles

4.3. New technology leads to new possibilities which will be discovered, embraced, regulated, mandated

4.3.1. Self-driving vehicles will work as traffic sensors

4.3.2. Self-driving vehicles can be used to influence and control traffic

4.3.3. Fleets of self-driving vehicles lead to a much better real-time understanding of traffic

4.3.4. Congestion-causing effect of a trip can be determined, quantified, taxed etc.

4.3.5. Building codes will reduce requirements for number of parking lots

4.4. Changes in attitudes

4.4.1. Human error in driving will no longer be tolerated (does not mean no more human driving, could just mean that SDC algorithms oversee human driving)

4.4.2. Personal car ownership may be banned in some inner cities

4.4.3. Preferences for privately owning a car may fall

4.4.4. If global warming becomes more of a threat, car ownership may increasingly be viewed critically, given ubiquitous access to mobility services
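
A note on point 2.3 of the outline, which ties person-kilometers traveled to vehicle-kilometers traveled through the occupancy rate. The relation can be sketched as follows. The symbols PKT, VKT and $o$ are shorthand introduced here, and the numbers in the example are purely illustrative assumptions, not estimates from this article. Here $o$ is the average number of persons per vehicle-kilometer, with empty runs of fleet vehicles counted as kilometers at zero occupancy.

```latex
\[
\mathrm{VKT} = \frac{\mathrm{PKT}}{o},
\qquad
\frac{\mathrm{VKT}_{\text{new}}}{\mathrm{VKT}_{\text{old}}}
  = \frac{\mathrm{PKT}_{\text{new}}}{\mathrm{PKT}_{\text{old}}}
    \cdot \frac{o_{\text{old}}}{o_{\text{new}}}
\]

% Illustrative case (assumed numbers): person-kilometers rise by 20 %
% while average occupancy rises from 1.5 to 1.8 persons per vehicle.
\[
\frac{\mathrm{VKT}_{\text{new}}}{\mathrm{VKT}_{\text{old}}}
  = 1.2 \cdot \frac{1.5}{1.8} = 1.0
\]
```

In this hypothetical case vehicle-kilometers stay flat even though people travel 20% more. Whether real occupancy gains would be of this size is exactly the kind of question that the first fleet deployments should help answer.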